Modern AI timeline

Plotting some of the recent research and developments in artificial intelligence (2006 - 2023), focusing on cognitive computing - text, images, and solving complex problems

This is a graphic that plots some of the key developments and breakthroughs in AI since the beginning of the 21st century, focused on cognitive computing - language, vision, and complex problem-solving through game play.

I created the first version during my PhD (2016 - 2020) and have continued updating it to keep track of progress. This one covers some of the most significant developments through to the end of 2023.

It’s a useful reminder of just how far we have advanced.

Note, for this article, I am using AI as a general term covering the use of machine learning algorithms to make predictions and generate content.

TLDR version: We’ve had substantial breakthroughs in the design of algorithms that advance computer vision, language processing, and solving complex tasks. These are core cognitive capabilities that come easily to humans and that we did not expect to see machines capable of for another decade or more. Whilst 2024 will continue to experience a lot of hype around AI, and we will likely see some spectacular failures, we are at the start of a new era of AI-based innovations. As usual, people are overestimating what will be achieved in the short-term because, when it comes to real-world applications, it is never just about the technology. But change is coming…

2006 - 2015: advances in computer vision

Machine learning accelerated in popularity from the beginning of the 21st century, as new and larger digitised data sources became available to learn from, alongside open-source languages such as Python and R that made it practical to analyse large data sets programmatically.

Many of the machine learning techniques used during the first decade were ‘classical’, and arguably could be called computational statistics. Classical machine learning can leverage massive data sets but eventually reaches a performance limit, after which adding further data to the training dataset does not yield better predictions.

Neural networks offered an alternative approach. Commonly referred to as ‘deep learning’, algorithms built using neural networks continue to improve as more and more data is added. We have yet to reach a limit. The constraint is the compute resource and time to build such models.

In 2006, Geoffrey Hinton and a team of researchers published a breakthrough paper showing how a fast greedy algorithm could better leverage neural networks in machine learning, ‘A fast learning algorithm for deep belief nets’ (Hinton et al, 2006). Around the same time, researchers began exploring the use of the graphics processing unit (GPU) to parallelise compute activities. In 2009, ‘Large-scale deep unsupervised learning using graphics processors’ (Raina et al, 2009) was published.

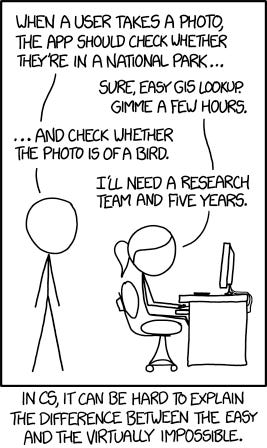

The combination of using GPUs and fast greedy algorithms to train neural networks led to a major breakthrough in computer vision. Before 2006, computer algorithms were limited to detecting objects in low-resolution images, with few practical applications. By 2012, algorithms were being trained on high-resolution images. But in September 2014, the comic XKCD was still joking that object detection in photos was an impossible task.

Five months later, in February 2015, Microsoft researchers published a paper demonstrating that a computer algorithm had, for the first time, outperformed humans at identifying objects in photos on the ImageNet benchmark (Microsoft, 2015).

In the same period, a new technique was published, Generative Adversarial Networks (Goodfellow et al, 2014), that reversed the computer vision process. Instead of detecting the content of an image, the model learns to create new images that resemble the ones it was trained on, an early step towards creating images from prompts for what the image should contain…

But whilst computer vision advanced dramatically, natural language processing algorithms didn’t seem to benefit quite as much from these new methods.

2017 - 2022: advances in language models

In 2017, Google researchers published a paper, ‘Attention Is All You Need’ (Vaswani et al, 2017), describing a new model architecture for neural networks - the transformer. The concept was based on taking a more holistic approach to understanding language: rather than reading strictly left to right, the model weighs every word in a sequence against every other word to decide which should be given the most attention when making a prediction, such as the next word in the sequence. In 2018, Google released the first language model based on the transformer architecture - Bidirectional Encoder Representations from Transformers, aka BERT. Within a year, it became the new baseline standard for natural language processing tasks and introduced the era of the large language model (LLM).
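To make the idea of ‘attention’ a little more concrete, here is a minimal sketch of scaled dot-product attention, the core operation described in the transformer paper. It is an illustration only: the function names and the three toy token vectors are made up for this example, and a real transformer would first pass each token through learned projections to produce separate queries, keys and values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three hypothetical 2-dimensional token vectors (made-up numbers).
# Assumption: the tokens are used directly as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` shows how much one token ‘attends’ to every token in the sequence, including itself. In this toy example the first token gives more weight to the third token, which it overlaps with, than to the second, which it does not.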

The largest version of the original BERT had 340 million parameters. A year later, in 2019, the recently formed OpenAI released its second Generative Pre-Trained Transformer model - GPT-2. The model had 1.5 billion parameters. As the size of the training data and models began to grow, researchers also began exploring methods to improve the efficiency of the training process. One promising publication was ‘The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks’ (Frankle and Carbin, 2019). The authors went on to found MosaicML, to build LLMs more efficiently.

The size of LLMs has continued to grow. In 2020, OpenAI released GPT-3 with 175 billion parameters. Microsoft stepped in and licensed GPT-3 for exclusive use. A year later, Microsoft and Nvidia jointly released a 530 billion parameter model - Megatron-Turing NLG 530B. The supporting news release claimed that the LLM had achieved common-sense reasoning.

Also in 2021, computer vision and natural language processing began to converge into single ‘multi-modal’ models. OpenAI released the first version of DALL-E, a model that could generate images based on text prompts. The following year, AI image generation created headlines when a computer-generated image was awarded first place in an art competition.

2022 was the year when generative AI models became mainstream news. As well as the controversy surrounding a computer-generated artwork winning a competition, a Google engineer was dismissed after creating headlines by claiming that an LLM in development at Google was sentient and may have its own feelings. And then, in November 2022, ChatGPT was launched. It became the fastest growing application in the history of the Internet, reaching 100 million users in just two months.

Throughout 2023, more LLMs were developed. OpenAI launched GPT-4 but no longer shares details about its training or size. It is rumoured to have over 1 trillion parameters… But 2023 also saw the rise of open source models, initially limited to research and non-commercial uses due to restrictions on the training data sets. By July, a number of LLMs licensed for commercial use had been published, including Llama 2 by Meta (Facebook). The focus also turned to making LLMs more efficient in cost and compute resources.

2015 - 2023: advances in complex problem solving

The third area of developments in cognitive computing involves leveraging game play to train AI models to handle complex tasks. Prior to 2015, this area had been dominated by IBM. In 1997, IBM Deep Blue became the first machine to beat a reigning world chess champion, Garry Kasparov, in a match. In 2011, IBM Watson became the first computer to beat human champions at the TV quiz show Jeopardy!. But from 2015, Google DeepMind has dominated the headlines with their Alpha series of models using neural networks.

In 2015, AlphaGo won for the first time against a professional human player at Go, a game far more complex than chess. In 2016, it beat Lee Sedol, one of the world's strongest players, and in 2017 an updated version beat the number 1 player in the world, Ke Jie. Lee Sedol retired in 2019, citing the ascendancy of AI as one of the reasons.

“With the debut of AI in Go games, I’ve realized that I’m not at the top even if I become the number one through frantic efforts. Even if I become the number one, there is an entity that cannot be defeated.” - Lee Sedol

The next iteration, AlphaZero, went even further. Whilst AlphaGo was trained on data from previous games, AlphaZero taught itself from scratch using reinforcement learning. It was not provided with any training data. Instead, it generated its own data as it learned by playing games against itself and against the best-performing predecessor, AlphaGo Zero. It took just 34 hours to surpass AlphaGo Zero at Go.

In 2018, Google DeepMind entered a new algorithm, AlphaFold, in the biennial protein folding competition CASP. Protein folding was considered one of the great unsolved problems in biology. AlphaFold took first place, outperforming all other entrants with an average score of 54.9%. In 2020, the next iteration of AlphaFold again took first place in CASP, this time with an average score of over 90%!

In 2022, AlphaTensor was launched, targeting matrix multiplication, a maths-intensive process that underpins the performance of many software programs and computer algorithms, including language models. And in 2023, a paper introduced AlphaDev, a model intended to find faster algorithms in computer science. The paper described how it had discovered a faster sorting algorithm, another process that underpins many software programs and computer algorithms.
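To give a sense of what a ‘faster’ matrix multiplication algorithm means, here is a small sketch using Strassen's classic 1969 method, not anything discovered by AlphaTensor. It multiplies two 2x2 matrices using 7 scalar multiplications instead of the usual 8. The function names are made up for this example. AlphaTensor searches for schemes of this kind, with fewer multiplications, across a range of matrix sizes.

```python
def naive_2x2(a, b):
    """Standard 2x2 matrix product: 8 scalar multiplications."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    return [
        [a11 * b11 + a12 * b21, a11 * b12 + a12 * b22],
        [a21 * b11 + a22 * b21, a21 * b12 + a22 * b22],
    ]


def strassen_2x2(a, b):
    """Strassen's scheme: 7 scalar multiplications, more additions."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    # Recombine the seven products into the four output entries.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
result = strassen_2x2(a, b)  # same answer as naive_2x2(a, b)
```

Saving one multiplication looks trivial at this size, but the same trick can be applied recursively to blocks of a large matrix, which is where the savings add up.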

AlphaTensor and AlphaDev both build on the approach adopted with AlphaZero - framing the problem as a game and learning to play it using reinforcement learning. AlphaFold is the exception, as it was trained on data from known protein structures.

Reinforcement learning (RL) also plays a central role in the development and tuning of large language models. However, LLMs also include human feedback in the process (RLHF) and are trained on existing data. For now at least. As data sources become harder to acquire and incorporate in training, it will be interesting to see what new developments emerge in 2024 and beyond…

References

The following are references for all data points on the AI timeline.

-Geoffrey E. Hinton, Simon Osindero, and Yee-Whye Teh. 2006. A fast learning algorithm for deep belief nets. Neural Comput. 18, 7 (July 2006), 1527–1554. https://doi.org/10.1162/neco.2006.18.7.1527

-Rajat Raina, Anand Madhavan, and Andrew Y. Ng. 2009. Large-scale deep unsupervised learning using graphics processors. In Proceedings of the 26th Annual International Conference on Machine Learning (ICML '09). Association for Computing Machinery, New York, NY, USA, 873–880. https://doi.org/10.1145/1553374.1553486

-IBM computer wins Jeopardy clash (2011) - https://www.theguardian.com/technology/2011/feb/17/ibm-computer-watson-wins-jeopardy

-Krizhevsky, Sutskever and Hinton (2012) - ImageNet Classification with Deep Convolutional Neural Networks. Part of Advances in Neural Information Processing Systems 25 (NIPS 2012) https://proceedings.neurips.cc/paper_files/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

-Goodfellow, et al (2014) - Generative Adversarial Networks https://arxiv.org/abs/1406.2661 (June 2014)

-TensorFlow - Google’s latest machine learning system, open sourced for everyone - https://ai.googleblog.com/2015/11/tensorflow-googles-latest-machine.html (November 2015)

-AlphaGo wins for first time - https://www.deepmind.com/research/highlighted-research/alphago#:~:text=In%20October%202015%2C%20AlphaGo%20played,a%20score%20of%205%2D0. (October 2015)

-Microsoft Researchers’ Algorithm Sets ImageNet Challenge Milestone - https://www.microsoft.com/en-us/research/blog/microsoft-researchers-algorithm-sets-imagenet-challenge-milestone/ (February 2015)

-Attention Is All You Need, by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin, Part of Advances in Neural Information Processing Systems 30 (NIPS 2017) - https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

-Google’s AlphaGo Defeats Chinese Go Master in Win for A.I. - https://www.nytimes.com/2017/05/23/business/google-deepmind-alphago-go-champion-defeat.html (May 2017)

-BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, Jacob Devlin and Ming-Wei Chang and Kenton Lee and Kristina Toutanova. https://arxiv.org/abs/1810.04805v1 (v1 2018, v2 2019) + Wikipedia page https://en.wikipedia.org/wiki/BERT_(language_model)

-Senior, A.W., Evans, R., Jumper, J. et al. Improved protein structure prediction using potentials from deep learning. Nature 577, 706–710 (2020). https://doi.org/10.1038/s41586-019-1923-7 and https://www.deepmind.com/blog/alphafold-a-solution-to-a-50-year-old-grand-challenge-in-biology

-Announcing PyTorch 1.0 for both research and production, by Bill Jia https://engineering.fb.com/2018/05/02/ai-research/announcing-pytorch-1-0-for-both-research-and-production/ (May 2018)

-The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks, Jonathan Frankle and Michael Carbin, https://arxiv.org/abs/1803.03635 (v1 2018, public release 2019)

-GPT-2: 1.5B release - https://openai.com/research/gpt-2-1-5b-release (Nov 2019) and original GPT-2 release - https://openai.com/research/better-language-models (Feb 2019) and code - https://github.com/openai/gpt-2

-GPT3 175bn parameter release - https://developer.nvidia.com/blog/openai-presents-gpt-3-a-175-billion-parameters-language-model/ (July 2020) and https://venturebeat.com/ai/openai-debuts-gigantic-gpt-3-language-model-with-175-billion-parameters/ (May 2020)

-Microsoft exclusively licenses GPT-3 - https://www.theverge.com/2020/9/22/21451283/microsoft-openai-gpt-3-exclusive-license-ai-language-research (Sept 2020) and https://blogs.microsoft.com/blog/2020/09/22/microsoft-teams-up-with-openai-to-exclusively-license-gpt-3-language-model/ (Sept 2020)

-Jumper J, Evans R, Pritzel A, Green T, Figurnov M, Ronneberger O, Tunyasuvunakool K, Bates R, Žídek A, Potapenko A, Bridgland A, Meyer C, Kohl SAA, Ballard AJ, Cowie A, Romera-Paredes B, Nikolov S, Jain R, Adler J, Back T, Petersen S, Reiman D, Clancy E, Zielinski M, Steinegger M, Pacholska M, Berghammer T, Silver D, Vinyals O, Senior AW, Kavukcuoglu K, Kohli P, Hassabis D. Applying and improving AlphaFold at CASP14. Proteins. 2021 Dec;89(12):1711-1721. doi: 10.1002/prot.26257. PMID: 34599769; PMCID: PMC9299164. https://pubmed.ncbi.nlm.nih.gov/34599769/

-Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, the World’s Largest and Most Powerful Generative Language Model - https://www.microsoft.com/en-us/research/blog/using-deepspeed-and-megatron-to-train-megatron-turing-nlg-530b-the-worlds-largest-and-most-powerful-generative-language-model/ (October 2021)

-DALL-E: creating images from text - https://openai.com/research/dall-e (January 2021)

-OPT: Open Pre-trained Transformer Language Models, Susan Zhang and Stephen Roller and Naman Goyal and Mikel Artetxe and Moya Chen and Shuohui Chen and Christopher Dewan and Mona Diab and Xian Li and Xi Victoria Lin and Todor Mihaylov and Myle Ott and Sam Shleifer and Kurt Shuster and Daniel Simig and Punit Singh Koura and Anjali Sridhar and Tianlu Wang and Luke Zettlemoyer. https://arxiv.org/abs/2205.01068

-An A.I.-Generated Picture Won an Art Prize. Artists Aren’t Happy. https://www.nytimes.com/2022/09/02/technology/ai-artificial-intelligence-artists.html (Sept 2022)

-Google engineer says Lamda AI system may have its own feelings - https://www.bbc.com/news/technology-61784011 (June 2022)

-Fawzi, A., Balog, M., Huang, A. et al. Discovering faster matrix multiplication algorithms with reinforcement learning. Nature 610, 47–53 (2022). https://doi.org/10.1038/s41586-022-05172-4 and https://www.deepmind.com/blog/discovering-novel-algorithms-with-alphatensor (Oct 2022)

-Introducing ChatGPT - https://openai.com/blog/chatgpt (November 2022)

-GPT-4 - https://openai.com/research/gpt-4 (March 2023)

-Introducing LLaMA: A foundational, 65-billion-parameter large language model - https://ai.meta.com/blog/large-language-model-llama-meta-ai/ (Feb 2023) – was open source for research and non-commercial only

-Meta and Microsoft introduce the next generation of Llama - https://ai.meta.com/blog/llama-2/ (18 July 2023) – open source for research and commercial use

-MosaicML MPT-7B - https://www.mosaicml.com/blog/mpt-7b (May 2023) and MPT-30b - https://www.mosaicml.com/blog/mpt-30b (June 2023)

-Mankowitz, D.J., Michi, A., Zhernov, A. et al. Faster sorting algorithms discovered using deep reinforcement learning. Nature 618, 257–263 (2023). https://doi.org/10.1038/s41586-023-06004-9 and https://www.deepmind.com/blog/alphadev-discovers-faster-sorting-algorithms (June 2023)